Digital Media started with the digitisation of audio, video and images, but it is not confined to them. The MPEG-4 mission has therefore been to add more and more media types: composition, characters and font information, 3D Graphics, etc. Other standards, like MPEG-7 and MPEG-21, have added other aspects of Digital Media. 3D Graphics, in particular, has led to very significant examples of “virtual worlds”, some giving rise to new businesses, as was the case with the much-hyped Second Life, where people, numbering millions at one time, could create their own alter ego living in a virtual space with its own economy where “assets” could be created, bought and sold.

Digital media content may also need to stimulate senses other than sight and hearing, the two traditionally involved in audio-visual media. Examples are olfaction, mechanoreception (i.e. sensory reception of mechanical pressure), equilibrioception (i.e. sensory reception of balance) and thermoception (i.e. sensory reception of temperature), some of which have become common experience in handsets. These additions to audio-visual content (movies, games, etc.) engage further senses, strengthening the feeling of being part of the media content and creating a user experience that is expected to provide greater value for the user.

Virtual worlds are used in a variety of contexts (entertainment, education, training, getting information, social interaction, work, virtual tourism, reliving the past, etc.) and have attracted interest as important enablers of new business models, services, applications and devices, as they offer the means to redesign the way companies interact with other entities (suppliers, stakeholders, customers, etc.).

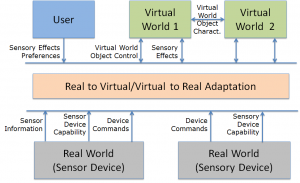

The “Media context and control” standard, nicknamed MPEG-V, provides an architecture and the digital representation of a wide range of data types that enable interoperability between virtual worlds – e.g., a virtual world set up by a provider of serious games and simulations – and between the real world and a virtual world via sensors and actuators. Figure 1 below gives an overview of the MPEG-V scope.

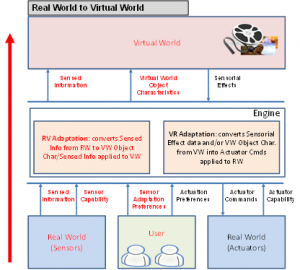

Figure 2 shows which technologies MPEG-V standardises for virtual-to-real and real-to-virtual interaction.

Figure 2 – A more detailed MPEG-V model for virtual-to-real and real-to-virtual world interaction

In the MPEG-V model described in Fig. 1 the virtual world receives Sensor Effects that have been generated by some sensor and generates Sensory Effects that eventually control some actuators. In general, however, the format of the information entering or leaving the virtual world differs from the one used by sensors and actuators, respectively. For example, the virtual world may wish to communicate a “cold” feeling, but the real-world actuators may have different physical means to realise “cold”. The user can specify how Sensory Effects should be mapped to Device Commands, and Devices can represent their capabilities: for instance, it is not possible to change the room temperature, but it is possible to activate a fan.
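The mapping from Sensory Effects to Device Commands can be illustrated with a minimal Python sketch. It is not the MPEG-V data format: the class names, the capability table, the preference table and the `adapt` function are all hypothetical and serve only to show how device capabilities and user preferences might together decide which command is issued.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SensoryEffect:
    """Hypothetical stand-in for an effect emitted by the virtual world."""
    kind: str         # e.g. "cold"
    intensity: float  # normalised to the range 0.0..1.0


@dataclass(frozen=True)
class DeviceCommand:
    """Hypothetical stand-in for a command sent to a real-world actuator."""
    device: str
    action: str
    level: float


# Assumed capability table: the effect kinds each available device can realise.
# There is no air conditioner here, so "cold" can only be approximated by a fan.
DEVICE_CAPABILITIES = {
    "fan": {"cold", "wind"},
    "lamp": {"light"},
}

# Assumed user preferences: preferred device and maximum level per effect kind.
USER_PREFERENCES = {
    "cold": {"device": "fan", "max_level": 0.6},
}


def adapt(effect: SensoryEffect,
          capabilities=DEVICE_CAPABILITIES,
          preferences=USER_PREFERENCES) -> Optional[DeviceCommand]:
    """Map one effect to one command, or return None if no device can render it."""
    candidates = sorted(d for d, kinds in capabilities.items() if effect.kind in kinds)
    if not candidates:
        return None
    pref = preferences.get(effect.kind, {})
    # Honour the preferred device only if it is actually capable of the effect.
    device = pref.get("device")
    if device not in candidates:
        device = candidates[0]
    # Clamp the requested intensity to the user's maximum level.
    level = min(effect.intensity, pref.get("max_level", 1.0))
    return DeviceCommand(device=device, action="set_level", level=level)
```

With these assumed tables, a strong “cold” effect is rendered by the fan at the user's capped level, while an effect that no device supports (for example a scent) yields no command at all.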

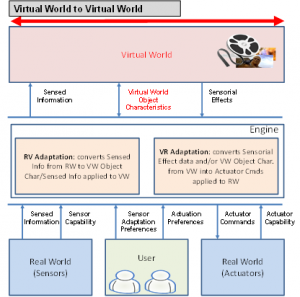

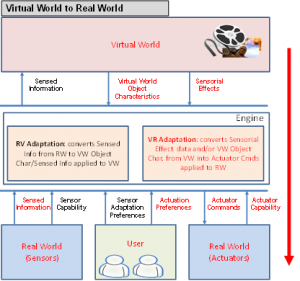

Figure 3 describes the role of the “Virtual world object characteristics” standard provided by Part 4 of MPEG-V.

Figure 3 – A more detailed MPEG-V model for virtual-to-virtual world interaction

Figure 4 provides a specific example illustrating some technologies specified in MPEG-V Part 3 “Sensory Information”, namely 1) the Sensory Effect Description Language (SEDL), enabling the description of “sensory effects” (e.g. light, wind, fog, vibration, etc.) that act on human senses, 2) the Sensory Effect Vocabulary (SEV), defining the actual sensory effects and allowing for extensibility and flexibility, and 3) Sensory Effect Metadata (SEM), a specific SEDL description that may be associated with any kind of multimedia content to drive sensory devices (e.g. fans, vibration chairs, lamps, etc.).

Figure 4 – Use of MPEG-V SEDL for augmented user experience
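The idea of metadata that accompanies multimedia content and drives sensory devices during playback can be sketched in Python as a timeline of timed effects. This is only an illustration under stated assumptions: it does not reproduce SEDL syntax or the SEV vocabulary, and the `TimedEffect` class, its fields and the `active_effects` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TimedEffect:
    """Hypothetical effect entry tied to a position on the media timeline."""
    kind: str         # e.g. "wind", "vibration", "light"
    start: float      # seconds from the start of the content
    duration: float   # seconds
    intensity: float  # normalised to the range 0.0..1.0


def active_effects(timeline: List[TimedEffect], t: float) -> List[TimedEffect]:
    """Return the effects that should be rendered at playback time t."""
    return [e for e in timeline if e.start <= t < e.start + e.duration]


# Assumed example description accompanying a 30-second clip.
timeline = [
    TimedEffect("wind", start=10.0, duration=5.0, intensity=0.8),
    TimedEffect("vibration", start=12.0, duration=1.5, intensity=1.0),
    TimedEffect("light", start=0.0, duration=30.0, intensity=0.4),
]

# A player would poll the timeline as the media clock advances and
# forward each active effect to the matching device.
for effect in active_effects(timeline, 12.5):
    print(f"{effect.kind}: intensity {effect.intensity}")
```

Keeping the effect description separate from the audio-visual stream means the same content can be played with or without sensory devices; a player lacking a given device simply ignores the corresponding entries.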