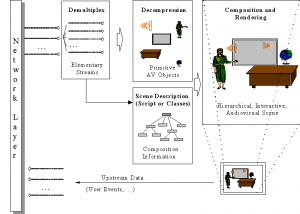

Many papers and books explain the MPEG-4 standard using the figure below, originally contributed by Phil Chou, then with Xerox PARC. This page will be no different :-).

We will use the case of a publisher of technical courses who expects to do a better job by using MPEG-4 as opposed to what was possible in the mid 1990s using a DVD as distribution medium.

Such a publisher would hire a professional presenter to show his slides and would make videos of him. The recorded lessons would be copied onto DVD and distributed. If anything changed, e.g. the publisher wanted to make a version of a successful course in another language, another presenter capable of speaking that language would be hired, the slides would be translated and a new version of the course would be published.

With MPEG-4, however, a publisher could reach a wider audience while cutting distribution costs.

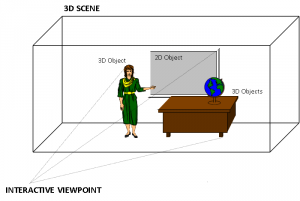

Figure 1 – A 3D scene suitable for MPEG-4

The standing lady is the presenter, giving her lecture with the help of a multimedia presentation, next to a desk with a globe on it. In the example, the publisher makes video clips of the professional presenter while she is talking, but this time the video is shot against a blue screen. The blue screen is useful because it makes it possible to extract just the shape of the presenter using “chroma key”, a well-known technique used in television for composition. The presenter’s voice is recorded in such a way that it is easy to dub it and to translate the audio-visual support material if a multilingual edition of the course is needed. There is no need to change the video.

Having the teacher as a separate video sprite, a professional designer can create a virtual set made up of a synthetic room with some synthetic furniture and the frame of a blackboard that is used to display the audio-visual support material. In the figure above there are the following objects:

- presenter (video sprite)

- presenter (speech)

- multimedia presentation

- desk

- globe

- background

With these it is possible to create an MPEG-4 scene composed of audio-visual objects: static “objects” (e.g. the desk that stays unchanged for the duration of the lesson) and dynamic “objects” (e.g. the sprite and the accompanying voice, and the sequence of slides).

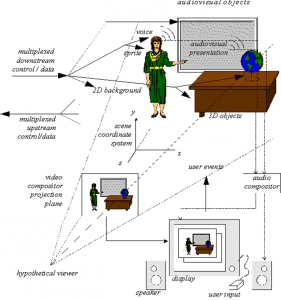

Figure 2 – An MPEG-4 scene

Of course an authoring tool is needed so that the author can place the sprite of the presenter wherever it is needed, e.g. near the blackboard, and then store all the objects and the scene description in the MP4 File Format. The presentation may be played back locally on the system that holds the file, or delivered via a network or another stream delivery mechanism. The file format is designed to be independent of any particular delivery protocol while enabling efficient support for delivery in general.

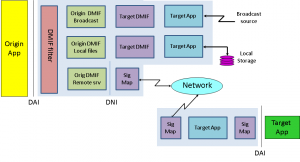

On the end-user side we assume that a subscriber to the course, after completing some form of payment or authentication (outside the scope of the MPEG-4 standard), can access the course. The first step is to set up a session between the client and the server. This is done using DMIF (Delivery Multimedia Integration Framework), the MPEG-4 session protocol for the management of multimedia streaming. Once the session with the remote side is set up, the streams needed for the particular lesson are selected and the DMIF client sends a request to stream them. The DMIF server returns pointers to the connections where the streams can be found, and finally the connections are established. Each audio-visual object is then streamed over a virtual channel called an Elementary Stream (ES) through the Elementary Stream Interface (ESI). The functionality provided by DMIF is expressed by the DMIF-Application Interface (DAI), as in the figure below, and translated into protocol messages. In general different networks use different protocol messages, but the DAI allows the DMIF user to specify the Quality of Service (QoS) requirements for the desired streams.

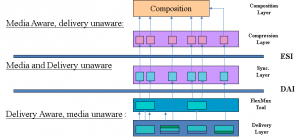

Figure 3 – The 3 layers in the MPEG-4 stack
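The session set-up described above can be sketched as a toy exchange in Python. This is only an illustration of the order of the steps (open a session, request streams, receive connection pointers). The class names, method names and pointer strings are invented for this sketch and are not the primitives or messages defined by DMIF.

```python
class ToyDmifServer:
    """Stands in for the remote DMIF peer; it only knows which streams exist."""

    def __init__(self, streams):
        self._streams = set(streams)
        self._next_channel = 1

    def open_session(self, user):
        # Hypothetical session identifier; real DMIF signalling is not modelled.
        return f"session-{user}"

    def request_streams(self, session, wanted):
        """Return one connection pointer per requested stream."""
        pointers = {}
        for name in wanted:
            if name not in self._streams:
                raise KeyError(f"unknown stream: {name}")
            pointers[name] = f"{session}/channel-{self._next_channel}"
            self._next_channel += 1
        return pointers


class ToyDmifClient:
    """Stands in for the DMIF client used by the MPEG-4 player."""

    def __init__(self, server):
        self._server = server
        self.session = None
        self.connections = {}

    def attach(self, user):
        # Step 1: set up the session with the remote side.
        self.session = self._server.open_session(user)

    def add_channels(self, wanted):
        # Step 2: ask for the streams needed for the lesson.
        if self.session is None:
            raise RuntimeError("set up the session first")
        pointers = self._server.request_streams(self.session, wanted)
        # Step 3: remember the connections on which the streams will arrive.
        self.connections.update(pointers)
        return pointers


server = ToyDmifServer(["sprite", "speech", "slides"])
client = ToyDmifClient(server)
client.attach("alice")
pointers = client.add_channels(["sprite", "speech"])
print(pointers)
```

The point of the sketch is the ordering constraint: streams can only be requested once a session exists, and the client learns where to find each stream from the server's reply.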

The “TransMux” (Transport Multiplexing) layer offers transport services matching the requested QoS. However, only the interface to this layer is specified because the specific choice of the TransMux is left to the user. The specification of the TransMux itself is left to bodies that are responsible for the relevant transport, with the obvious exception of MPEG-2 TS, whose body in charge is MPEG itself. The second multiplexing layer is the M4Mux, which allows grouping of ESs with a low multiplexing overhead. This is particularly useful when there are many ESs with similar QoS requirements, each possibly with a low bitrate. In this case it is possible to reduce the number of network connections, the transmission overhead and the end-to-end delay.
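The benefit of grouping ESs with similar QoS requirements can be illustrated with a small Python sketch. The stream names and the two QoS labels are assumptions made for this example, and the M4Mux packet syntax itself is not modelled. The sketch only shows how six streams can share two connections.

```python
from collections import defaultdict

# (stream name, QoS class) pairs; both the names and the classes are hypothetical.
streams = [
    ("sprite", "low-delay"),
    ("speech", "low-delay"),
    ("slides", "reliable"),
    ("desk", "reliable"),
    ("globe", "reliable"),
    ("background", "reliable"),
]


def group_by_qos(streams):
    """Put every stream with the same QoS class into one multiplexed channel."""
    groups = defaultdict(list)
    for name, qos in streams:
        groups[qos].append(name)
    return dict(groups)


channels = group_by_qos(streams)
print(len(streams), "streams share", len(channels), "connections")
```

Without grouping, each of the six streams would need its own network connection. With grouping, the number of connections equals the number of distinct QoS classes.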

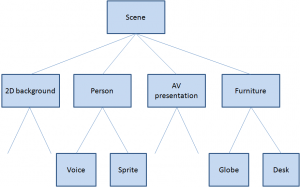

The special ES containing the Scene Description plays a unique role. The Scene Description is a graph represented by a tree, as in the figure below, which refers to the scene of Figure 2.

Figure 4 – An MPEG-4 scene graph

With reference to the specific example, at the top of the graph we have the full scene with four branches: the background, the person, the audio-visual presentation and the furniture. The first branch is a “leaf” because there is no further subdivision, but the second and fourth are further subdivided. The object “person” is composed of two media objects: a visual object and an audio object (the lady’s video and voice). The object “furniture” is composed of two visual objects, the desk and the globe. The audio-visual presentation may itself be another scene. The ESs carry the information corresponding to the individual “leaves”. They are decompressed by the appropriate decoders and composed in a 3D space using information provided by the scene description.
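The tree just described can be written down as a small Python data structure. This is a minimal sketch, not the BIFS scene description format used by MPEG-4. The `Node` class and the `ES_...` stream labels are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """One node of the scene tree; `stream` names the ES feeding a leaf."""
    name: str
    stream: Optional[str] = None  # hypothetical ES label, set on leaves only
    children: List["Node"] = field(default_factory=list)

    def leaves(self):
        """Yield the leaf nodes in depth-first, left-to-right order."""
        if not self.children:
            yield self
        for child in self.children:
            yield from child.leaves()


scene = Node("scene", children=[
    Node("background", stream="ES_background"),
    Node("person", children=[
        Node("presenter video", stream="ES_sprite"),
        Node("presenter voice", stream="ES_speech"),
    ]),
    Node("presentation", stream="ES_slides"),
    Node("furniture", children=[
        Node("desk", stream="ES_desk"),
        Node("globe", stream="ES_globe"),
    ]),
])

leaf_names = [leaf.name for leaf in scene.leaves()]
print(leaf_names)
```

Walking the tree yields the six leaves, one per media object, which is where the elementary streams attach. Here the presentation is treated as a single leaf, although, as noted above, it could itself be another scene.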

The other important feature of the DAI is the provision of a uniform interface to access multimedia contents on different delivery technologies. This means that the part of the MPEG-4 player sitting on top of the DAI is independent of the actual type of delivery: interactive networks, broadcast and local storage. This can be seen from the figure below. In the case of a remote connection via the network there is a real DMIF peer at the server, while in the local disk and broadcast access cases there is a simulated DMIF peer at the client.

Figure 5 – DMIF independence from delivery mechanism

In the same way as MPEG-1 and MPEG-2 describe the behaviour of an idealised decoding device along with the bitstream syntax and semantics, MPEG-4 defines a System Decoder Model (SDM). The purpose of the SDM is to define precisely the operation of the terminal without making unnecessary assumptions about implementation details that may depend on a specific environment. As an example, some devices may receive MPEG-4 streams over isochronous networks, while others will use non-isochronous means (e.g. the internet). The specification of a buffer and timing model is essential for designing encoding devices that may be unaware of what the terminal device is or how it will receive the encoded stream. Each stream carrying media objects is characterised by a set of descriptors carrying configuration information, e.g. to determine the precision of the encoded timing information. The descriptors may also carry “hints” about the QoS required for transmission (e.g. maximum bit rate, bit error rate, priority, etc.).

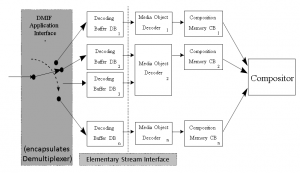

ESs are subdivided into Access Units (AU). Each AU is time stamped for the purpose of ES synchronisation. The synchronisation layer manages the identification of such AUs and the time stamping. ESs coming from the demultiplexing function are stored in Decoding Buffers (DB) and the individual Media Object Decoders (MOD) read the data from there. The Elementary Stream Interface (ESI) is located between DBs and MODs, as depicted in Figure 6.

Figure 6 – The MPEG-4 decoder model
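The role of time-stamped AUs and Decoding Buffers can be sketched in Python as follows. This is a simplified illustration, not the normative System Decoder Model. The `AccessUnit` fields, the use of seconds as the time unit and the tie-breaking rule are assumptions of the sketch.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessUnit:
    stream: str
    dts: float      # decoding time stamp; seconds are an assumption here
    payload: bytes


class DecodingBuffer:
    """FIFO holding the AUs of one elementary stream until they are decoded."""

    def __init__(self):
        self._queue = deque()

    def push(self, au):
        self._queue.append(au)

    def peek(self):
        return self._queue[0] if self._queue else None

    def pop(self):
        return self._queue.popleft()


def decode_order(buffers):
    """Yield AUs from all buffers in increasing time-stamp order.

    On equal time stamps the buffer listed first in `buffers` wins.
    """
    while True:
        ready = [b for b in buffers.values() if b.peek() is not None]
        if not ready:
            return
        earliest = min(ready, key=lambda b: b.peek().dts)
        yield earliest.pop()


buffers = {"video": DecodingBuffer(), "audio": DecodingBuffer()}
for dts in (0.0, 0.04):
    buffers["video"].push(AccessUnit("video", dts, b""))
for dts in (0.0, 0.02):
    buffers["audio"].push(AccessUnit("audio", dts, b""))

order = [(au.stream, au.dts) for au in decode_order(buffers)]
print(order)
```

Because every AU carries its own time stamp, the decoders can be driven from the buffers alone, regardless of the order or the network over which the streams arrived.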

The functions of an MPEG-4 decoder are represented in Figure 7.

Figure 7 – Functions of an MPEG-4 decoder model

Depending on the viewpoint selected by the user, the 3D space generated by the MPEG-4 decoder is projected onto a 2D plane and rendered: the visual part of the scene is displayed on the screen and the audio part is played through the loudspeakers. The user can hear the lesson and view the presentation in the language of his choice by interacting with the content. This interaction can be separated into two major categories: client-side interaction and server-side interaction. Client-side interaction involves locally handled content manipulation, and can take several forms. In particular, the modification of an attribute of a scene description node, e.g. changing the position of an object, making it visible or invisible, changing the font size of a synthetic text node, etc., can be implemented by translating user events, such as mouse clicks or keyboard commands, into scene description updates. The MPEG-4 terminal can process these commands in exactly the same way as if they had been embedded in the content. Other interactions require sending commands to the source of information using the upstream data channel.
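Client-side interaction can be sketched in Python as a translation from user events into scene description updates. The node names, event fields and key bindings below are invented for the example. What the sketch is meant to show is that updates derived from user events and updates embedded in the content go through the same `apply_update` function.

```python
# A toy scene: node name -> attributes (all names are hypothetical).
scene = {
    "presenter": {"position": (0.0, 0.0), "visible": True},
    "slide_text": {"font_size": 12, "visible": True},
}


def apply_update(scene, update):
    """Apply one (node, field, value) update to the scene."""
    node, field_name, value = update
    if node not in scene or field_name not in scene[node]:
        raise KeyError(f"no field {field_name!r} on node {node!r}")
    scene[node][field_name] = value


def event_to_update(scene, event):
    """Translate a user event into a scene update, or None if unmapped."""
    if event["type"] == "drag":
        return (event["target"], "position", event["to"])
    if event["type"] == "key" and event["key"] == "h":
        return (event["target"], "visible", False)
    if event["type"] == "key" and event["key"] == "+":
        size = scene[event["target"]]["font_size"]
        return (event["target"], "font_size", size + 2)
    return None


# An update that arrives embedded in the content.
for update in [("slide_text", "font_size", 14)]:
    apply_update(scene, update)

# Updates produced locally from user events take the same path.
events = [
    {"type": "drag", "target": "presenter", "to": (1.5, 0.0)},
    {"type": "key", "key": "+", "target": "slide_text"},
    {"type": "click", "target": "presenter"},  # unmapped, so ignored
]
for event in events:
    update = event_to_update(scene, event)
    if update is not None:
        apply_update(scene, update)

print(scene)
```

Server-side interaction is not covered by the sketch, since it would require sending commands over the upstream channel instead of changing the local scene.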

Imagine now that the publisher has successfully entered the business of selling content on the web, but one day he discovers that his content can be found on the web for people to enjoy without getting it from the publisher. The publisher can use MPEG-4 technology to protect the Intellectual Property Rights (IPR) related to his course.

A first level of content management is achieved by adding the Intellectual Property Identification (IPI) data set to the coded media objects. This carries information about the content, type of content and (pointers to) rights holders, e.g. the publisher or other people from whom the right to use content has been acquired. The mechanism provides a registration number similar to the well established International Standard Recording Code (ISRC) used in CD Audio. This is a possible solution because the publisher may be quite happy to let users freely exchange information, provided it is known who is the rights holder, but for other parts of the content, the information has greater value so that higher-grade technology for management and protection is needed.

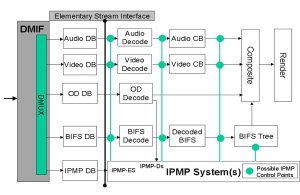

MPEG-4 has specified the MPEG-4 IPMP interface allowing the design and use of domain-specific IPMP Systems (IPMP-S). This interface consists of IPMP-Descriptors (IPMP-D) and IPMP-Elementary Streams (IPMP-ES) that provide a communication mechanism between IPMP-Ss and the MPEG-4 terminal. When MPEG-4 objects require management and protection, they have IPMP-Ds associated with them to indicate which IPMP-Ss are to be used and provide information about content management and protection. It is to be noted that, unlike MPEG-2 where a single IPMP system is used at a time, in MPEG-4 different streams may require different IPMP-Ss. Figure 8 describes these concepts.

Figure 8 – The MPEG-4 IPMP model

MPEG-4 IPMP is a powerful mechanism. As an example, it allows a user to “buy” from a third party the right to use certain content that is already in protected form.

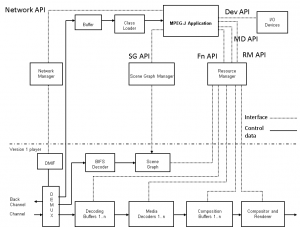

Another useful feature to make content more interesting is to add programmatic content to the scene. The technology used is called MPEG-J, a programmatic system (as opposed to the purely declarative system described so far). This specifies APIs to enable Java code to manage the operation of the MPEG-4 player. By combining MPEG-4 media and executable code, one can achieve functionalities that would be cumbersome to achieve just with the declarative part of the standard (see figure below).

Figure 9 – MPEG-J model

The lower half of this drawing represents the parametric MPEG-4 Systems player also referred to as the Presentation Engine. The MPEG-J subsystem controlling the Presentation Engine, also referred to as the Application Engine, is depicted in the upper half of the figure. The Java application is delivered as a separate elementary stream to the MPEG-J run time environment of the MPEG-4 terminal, from where the MPEG-J program will have access to the various components and data of the MPEG-4 player.