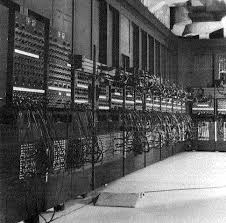

Electronic computers have been a great invention indeed and the progress of the hardware that has enabled the building of machines that get smaller and smaller year after year while, at the same time, they get more powerful in processing data, has been and continues to be astonishing. In 70 years we have moved from machines that could be be measured in cubic metres and tons (the first ENIAC weighed more than 30 tons) and needed special air-conditioned rooms,

Figure 1 – A corner of the ENIAC computer

to devices that can be measured in cubic millimetres and grams and can be found in cars, domestic appliances or are even worn by people. At the same time the ability to process data has improved by many orders of magnitude.

Unlike most other machines, which can be used as soon as they are physically available, a computer can be used for a purpose only after one has prepared a set of instructions (a “program”). This may be easy to do if the program is simple, but may require a major effort if the program involves a long series of instructions with branching points that depend on the state of the machine, its environment and user input. Today there are programs of such complexity that the cost of developing them alone can only be adequately measured in hundreds of millions, if not billions, of USD.

So, from the early days of the electronic computer age, writing programs has invariably been a very laborious process. In the early years, when computers were programmed using so-called “machine language”, particular training was required because programs consisted entirely of numbers that had to be written by a very skilled programmer. This was so awkward that it prompted the early replacement of machine language with “assembly language”, which had the same structure and command set as the machine language but allowed names made of characters, instead of numbers, to represent instructions and variables. As a result, long binary codes, difficult to remember and decode, could be replaced by mnemonic programming codes.
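The step from numbers to mnemonics can be illustrated with a toy translator. This is only a sketch: the three-instruction machine, its opcode numbers and the memory addresses below are invented for illustration and do not correspond to any real computer.

```python
# Hypothetical machine: these opcode numbers are invented for illustration.
OPCODES = {"LOAD": 1, "ADD": 2, "STORE": 3}

# Names stand in for numeric memory addresses (also invented).
SYMBOLS = {"price": 100, "tax": 101, "total": 102}


def assemble(source, symbols):
    """Translate lines such as 'ADD tax' into (opcode, address) number pairs."""
    program = []
    for line in source.strip().splitlines():
        mnemonic, operand = line.split()
        program.append((OPCODES[mnemonic], symbols[operand]))
    return program


ASSEMBLY = """
LOAD price
ADD tax
STORE total
"""

# The "machine language" version is nothing but numbers.
MACHINE_CODE = assemble(ASSEMBLY, SYMBOLS)
print(MACHINE_CODE)
```

The programmer writes and reads the mnemonic text, while the machine only ever sees the list of number pairs.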

New “high-level” programming languages, such as COBOL, FORTRAN and Pascal, were developed after the assembly languages. These not only enabled computer programming in a language that was more similar to a natural language, but were to a large extent independent of the particular type of computer and could therefore be used, possibly with minor adaptations, on other computers. Of course every type of computer had to have a “compiler”, i.e. a specially designed program capable of converting the program’s “high-level” instructions into a program written in the machine or assembly language specific to the environment.

Because of the very high demand for programs from a variety of application domains and the long development times that increasing customer demands placed on developers, there has always been a strong incentive to improve the effectiveness of computer programming. One way to achieve this was through the splitting of monolithic programs into blocks of instructions called “modules” (also called routines, functions, procedures, etc.). These could be independently developed and even become “general purpose”, i.e. they could be written in a way that would allow re-use in other programs to perform a specific function. Using modules, a long and unwieldy program could become a slim and readable “main” (program) that just made “calls” to such modules. Obviously a call had to specify the module name and in most cases the call to a module contained the parameters, both input and output, that were passed between calling program and module, and vice-versa. Each module could be “compiled”, i.e. converted to machine language, for a particular computer type and combined with the program at the time of “linking”.
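A minimal sketch of this idea follows, written in Python purely for illustration (the module names and the averaging task are assumptions, not taken from any particular program). Each function is a small reusable module with input parameters and a return value, and the “main” is reduced to a sequence of calls.

```python
def read_values(text):
    """General-purpose module: parse whitespace-separated numbers."""
    return [float(token) for token in text.split()]


def average(values):
    """General-purpose module: arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


def format_report(label, value):
    """General-purpose module: render a labelled number with two decimals."""
    return f"{label}: {value:.2f}"


def main(raw_text):
    """The slim "main": it only passes parameters between modules."""
    values = read_values(raw_text)
    mean = average(values)
    return format_report("average", mean)


REPORT = main("3 4 8")
print(REPORT)
```

None of the three modules knows anything about the others, so each could be reused unchanged in a different program.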

An obvious classification of modules is between “system level” and “application level”. The former type of module is so called because its purpose is more generic, say, to get data from a keyboard, print a line of text on an output device, etc. The latter type of module is so called because its purpose is more application specific.

From very early on, the collection of “system level” routines was called an Operating System (OS). At the beginning, an OS was a small collection of very basic routines. These were obviously specific to the architecture, maker and model of the computer and were written entirely in assembly language. In fact the low processing power of the CPUs of that time made optimisation of execution an issue of primary importance. As a consequence, the hardware vendor usually supplied the OS with the machine. Later on, OSs became very large with support of advanced features, such as time-sharing, that were offered even with small (for that time) machines, like minicomputers. The importance of the OS and related programs grew constantly over time and, already in the 1970s, machines were often selected more for the features of the OS than for the hardware.

A major evolution took place in the 1970s, when Bell Labs developed a general purpose OS called UNIX. The intention was to make an OS that would overcome the tight dependence of the OS on the machine that had prevailed until that time. That dependence could be reduced but not eliminated. A natural solution was to split the OS in two: the first part (“lower” in an obvious hierarchy, starting from the hardware) was called the “kernel” and contained instructions that were specific to the computer; the second, “higher” part was “generic” and could be used in any computing environment. Such a rational solution quickly made UNIX popular and its use spread widely, particularly in the academic environment. Early UNIX support of networking functions, and in particular of the Internet Protocol (IP), was beneficial for the adoption of both the internet and UNIX. Today there are several variations of UNIX that are utilised by a range of computers of different makes. One of them is Linux, an Open Source rewriting of UNIX started by Linus Torvalds, which is playing an increasingly important role in many computing devices.

In 1975 William Gates III, then aged 19, dropped out of Harvard and founded a company called Microsoft with his friend Paul Allen. They wrote a version of the BASIC programming language for the Altair 8800 microcomputer and put it on the market. In 1980, IBM needed an OS for its PC, whose design was progressing fast. After trying with Digital Research, an established software company that owned Control Program for Microcomputers (CP/M), then a well-known OS in PCs of various makes, IBM selected the minuscule (at that time) Microsoft to develop an operating system for its PC. As he did not have an OS ready, Gates bought, for 50,000 USD, all the rights to 86-DOS, an OS for the Intel 8086 written by Seattle Computer Products, rewrote it, named it DOS and licensed it to IBM. Gates obtained from his valued customer the right to retain ownership of what was to become known as the Microsoft Disk Operating System (MS-DOS), a move that allowed him to control the evolution of the most important PC OS. MS-DOS evolved through different versions (2.11 being the most used for some time in the mid-1980s).

In 1995, after some 10 years of development, Microsoft released Windows 95, in which MS-DOS ceased to be the OS “kernel” and became available, for users who still needed it, only as a special program running under the new OS. Another OS, called New Technology (NT), which Microsoft had started without the MS-DOS backward compatibility constraints of Windows 95, was later given the same user interface as Windows 95, thereby becoming indistinguishable to the user. Other OSs of the same family are Windows 98 (based on Windows 95), Windows 2000 and Windows XP (both based on Windows NT), Vista, Windows 7, Windows 8 and Windows 10. Microsoft also developed a special version of Windows, called Windows CE, for resource-constrained devices, and Windows Mobile for mobile devices like cell phones. The latest move is the convergence of all devices (PCs, tablets and smartphones) to Windows 10 using the same user interface.

A different approach was taken by Sun Microsystems (now part of Oracle) in the first half of the 1990s, when it became clear that IT for the CE domain could not be based on the paradigms that had ruled for the previous 50 years of IT, because application programs had to run on different machines. Sun defined a Virtual Machine (VM), i.e. an instruction set for a virtual processor, with a set of libraries that provided services for the execution environment, and called this “Java”. Different profiles of libraries were defined so that Java could be run in environments with different capabilities and requirements. Java became the early choice for program execution in the web browser, but was later gradually displaced by a scripting language called ECMAScript (aka JavaScript), whose capabilities, combined with hardware progress, promise to make it a universal language also for resource-demanding applications.

The situation described above provides the opportunity to check once more the validity of another of the sacred cows of market-driven technology, i.e. that competition makes technology progress. In this general form the statement may be true or untrue and may be relevant or irrelevant. Linux is basically the rewriting of an OS that was designed 40 years ago, and that design is now finding more and more application: macOS, built on a BSD-derived UNIX foundation, and Android, built on the Linux kernel, are both very successful OSs. Windows was able to achieve today’s performance and stability thanks to the revenues from the MS-DOS franchise, which let the company invest in a new OS for 10 years and keep on doing so for another 20 years.

The problem I have with the statement “competition makes technology progress” lies with the unqualified “let competition work” policy. Such a policy forces people to compete on every single element of the technology. It leads to a gigantic dispersion of resources, because every competitor has to develop all the elements of the technology platform from scratch. It actually reduces the number of entities that can participate in the competition, thus excluding potentially valid competitors, and it eventually produces an outcome whose quality is not commensurate with the (global) investment. Therefore, unless a company has huge economic resources, like IBM used to have in hardware, Microsoft has in software, Apple has in hardware and software, and Google has in software (until it leverages hardware as well), it can perfect none or very few of those elements. The result is then a set of poor solutions that the market is forced to accept. Users, who eventually foot the software development bill, are the ones that fund its evolution. There is progress, but at a snail’s pace, and confined to very few people. Eventually, as the PC OS case shows, the market finds itself largely locked into a single solution controlled by one company. The solution may eventually be good (as a PC user for more than 30 years, I can attest that it is or, better, that it used to be good for some time), but at what cost!

Rationality would instead suggest that some basic elements of the technology platform – obviously to be defined – should be jointly designed so that a level playing field is created on which companies can compete in the provision of good solutions for the benefit of their customers without having to support expensive developments that do not provide a significant added value, distract resources from achieving the goals that matter and result in an unjustified economic burden to end users.

With the maturing of the business of application program development, it was quite natural that such programs would start being classified as “low level”, such as a compiler, and “high level”. Writing programs became more and more sophisticated and new program development methodologies were needed.

The modularisation approach described above represents the “procedural” approach to programming, because the focus is on the “procedures” that have to be “called” in order to solve a particular problem. Structured programming, instead, has the goal of ensuring that the structure of a program helps a programmer understand what the program does. A program is structured if the control flow through the program is evident from the syntactic structure of the program. As an example, structured programming discourages the use of “GOTO”, an instruction typically used to branch a program depending on the outcome of a certain event, and one that tends to make the program less understandable.
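The difference can be sketched with two versions of the same small computation. Python has no GOTO, so the first function emulates jumps with a `label` variable (an assumption made only to show the style); the second lets the syntax carry the control flow.

```python
def sum_with_jumps(n):
    """GOTO style, emulated: each branch "jumps" by assigning the next label."""
    label = "init"
    while True:
        if label == "init":
            total, i = 0, 1
            label = "test"
        elif label == "test":
            label = "body" if i <= n else "done"
        elif label == "body":
            total += i
            i += 1
            label = "test"
        elif label == "done":
            return total


def sum_structured(n):
    """Structured style: the loop is visible in the shape of the code."""
    total = 0
    for i in range(1, n + 1):
        total += i
    return total


print(sum_with_jumps(10), sum_structured(10))
```

Both functions return the same result, but only in the second can a reader see at a glance that there is a single loop running from 1 to n.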

A further evolution in programming was achieved towards the mid-1980s with object orientation. An “object” is a self-contained piece of software consisting of both the data and the procedures needed to manipulate the data. Objects are built using a specific programming language for a specific execution environment. Object oriented programming is an effective methodology because programmers can create new objects that inherit some of their features from existing objects, again under the condition that they are written for the same programming language and the same execution environment.
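A minimal sketch of these two notions, data bundled with procedures and inheritance, is given below. The `Account` and `SavingsAccount` classes are hypothetical examples chosen for illustration.

```python
class Account:
    """An object: data (owner, balance) plus the procedures that manipulate it."""

    def __init__(self, owner, balance=0):
        self.owner = owner
        self.balance = balance

    def deposit(self, amount):
        self.balance += amount
        return self.balance


class SavingsAccount(Account):
    """A new kind of object that inherits owner, balance and deposit()."""

    def __init__(self, owner, balance=0, rate=0.0):
        super().__init__(owner, balance)
        self.rate = rate

    def add_interest(self):
        # Reuses the inherited deposit() instead of rewriting it.
        return self.deposit(self.balance * self.rate)


savings = SavingsAccount("Ada", 100, rate=0.5)
print(savings.add_interest())
```

As the text notes, this reuse works only within one language and one execution environment: a `SavingsAccount` written in Python cannot inherit from an object written for a different runtime.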

After OSI, a new universality devil, objects, struck the IT world. Yet another general architecture was conceived by the Object Management Group (OMG): the Common Object Request Broker Architecture (CORBA). The intention behind this architecture was to enable objects to communicate with one another regardless of the programming language used to write them, the operating system they were running on, their location and the host hardware. This functionality had to be provided by an Object Request Broker (ORB), a middleware (a software layer) that manages communication and data exchange between objects and allows a client to request a service without knowing anything about what servers are attached to the network. The various ORBs receive the requests, forward them to the appropriate servers, and then hand the results back to the client.

Since CORBA does not look inside objects because it deals with the interfaces, it needs a language to specify interfaces. The OMG developed Interface Definition Language (IDL), a special object oriented language that programmers can use to specify interfaces. CORBA allows the use of different programming languages for clients and objects, which can execute on different hardware and operating systems, and the clients and objects cannot detect these details about each other.
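The division of roles can be sketched in a few lines. What follows is a toy, single-process illustration under stated assumptions, not CORBA itself: there is no network, no real IDL and no real ORB API, and the names `Thermometer`, `AtticSensor` and `ToyBroker` are hypothetical. The abstract class stands in for an interface definition, and the broker forwards requests by name so that the client never touches the implementation.

```python
from abc import ABC, abstractmethod


class Thermometer(ABC):
    """Interface only: plays the role an IDL definition would play."""

    @abstractmethod
    def read_celsius(self):
        ...


class AtticSensor(Thermometer):
    """One possible implementation, hidden behind the interface."""

    def read_celsius(self):
        return 21.5


class ToyBroker:
    """Stand-in for an ORB: locates an object by name and forwards the request."""

    def __init__(self):
        self._servants = {}

    def register(self, name, servant):
        self._servants[name] = servant

    def invoke(self, name, operation, *args):
        servant = self._servants[name]
        return getattr(servant, operation)(*args)


broker = ToyBroker()
broker.register("attic", AtticSensor())

# The client knows only a name and an operation from the interface.
READING = broker.invoke("attic", "read_celsius")
print(READING)
```

In a real deployment the broker would also have to translate between languages, operating systems and hosts, which is where all the complexity discussed here ends up.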

It clearly seems like a programmer’s dream come true: you keep on living in the (dream) world you have created for yourself and somebody else will benevolently take care of smoothing out the differences between yours and other people’s (dream) worlds.

The reality, however, is quite different. A successful exploitation of the CORBA concepts can be found in Sun’s Remote Method Invocation (RMI). This enables Java objects to communicate remotely with other Java objects, but not with other objects. Microsoft, with its Component Object Model (COM) and Distributed Component Object Model (DCOM), created another successful exploitation of CORBA concepts for any programming language, but only in the Windows environment, where programmers can develop objects that can be accessed by any COM-compliant application distributed across a network. The general implementation of the CORBA model, however, finds it much harder to make practical inroads.

CORBA is, after OSI, another attempt at solving a problem that cannot be solved because of the way the IT world operates. To reap the benefits of communication, people must renounce some freedom (remember my definition of standard) to choose programming and execution environments. If no one agrees to cede part of their freedom, all the complexity is put in some magical central function that performs all the necessary translation. Indeed, yet another OSI dream…

Of course if this does not happen it is not because IT people are dull (they are smart indeed), but because doing things any differently than the way they do would make proprietary systems talk to one another. And this is exactly what IT vendors have no intention of doing.

Going back to my group at CSELT, a big investment was made in CORBA when we developed the ARMIDA platform because the User-to-User part of the DSM-CC protocol is based on it. That experience prompted me to try and do something in this area that concerned this layer of software called middleware.