Both audio and video signals can be represented as waveforms. The number of waveforms corresponding to a signal is 1 for telephone, 2 for stereo music and 3 for colour television. While it is intuitively clear that an analogue waveform can be represented to any degree of accuracy by taking a sufficiently large number of samples of the waveform, it was Harry Nyquist, a researcher at Bell Labs in the 1920s, who made the discovery, formalised in the theorem bearing his name, that a signal with a finite bandwidth of B Hz can be perfectly – in a statistical sense – reconstructed from its samples if the number of samples per second taken on that signal is greater than 2B. Bell Labs used to be the research centre of the Bell System, which included AT&T, the telephone company operating in most of the USA, and Western Electric, the manufacturing arm (actually it was the research branch of Western Electric’s engineering department that became Bell Labs).
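The sampling condition can be illustrated with a small numerical experiment. The sketch below is only an illustration, with an arbitrarily chosen sampling rate of 8 kHz (so B must stay below 4 kHz) and hypothetical helper names: a 3 kHz tone respects the condition, while a 5 kHz tone does not and yields samples that are indistinguishable, up to a sign, from those of the 3 kHz tone.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, n_samples):
    """Return n_samples of sin(2*pi*f*t) taken at the given sampling rate."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(n_samples)]

SAMPLE_RATE_HZ = 8000  # illustrative choice; tones must stay below 4000 Hz

tone_3k = sample_sine(3000, SAMPLE_RATE_HZ, 16)  # below half the sampling rate
tone_5k = sample_sine(5000, SAMPLE_RATE_HZ, 16)  # above half the sampling rate

# sin(2*pi*5n/8) = sin(2*pi*n - 2*pi*3n/8) = -sin(2*pi*3n/8),
# so the 5 kHz samples are just the negated 3 kHz samples.
aliased = all(math.isclose(a, -b, abs_tol=1e-9)
              for a, b in zip(tone_5k, tone_3k))
print(aliased)
```

Once sampled, the 5 kHz tone can no longer be told apart from a 3 kHz one, which is why the bandwidth of the signal has to be limited before sampling.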

Figure 1 – Signal sampling and quantisation

Around the mid 1940s it became possible to build electronic computers. These were machines with thousands of electronic tubes, designed to perform any sort of calculation on numbers expressed in binary form, following the sequence of operations described in a list of instructions called a “program”. For several decades the electronic computer was used in a growing number of fields: science, business, government, accounting, inventory etc., in spite of the guess of one IBM executive in the early days that “the world would need only 4 or 5 such machines”.

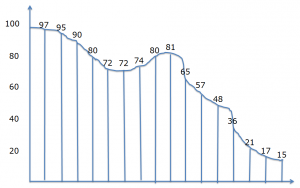

Researchers working on audio and video saw the possibility of eventually using electronic computers to process samples taken from waveforms. The Nyquist theorem establishes the conditions under which using samples is statistically equivalent to using the continuous waveforms, but samples are in general real numbers, while digital electronic computers can only operate on numbers expressed with a finite number of digits. Fortunately, another piece of research carried out at Bell Labs showed that, given a statistical distribution of samples, it is possible to calculate the minimum number of levels – called quantisation levels – that must be used to represent signal samples so that the power of the error generated by the imperfect digital representation stays below a given value.
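The relation between the number of quantisation levels and the error power can be explored empirically. The following is a minimal sketch, not the method used at Bell Labs: it assumes a uniform quantiser over the range [-1, 1], a full-scale sine as a stand-in for real signal samples, and an arbitrary target error power; all function names are hypothetical. For a uniform quantiser with step Δ the error power is commonly approximated by Δ²/12, and the measured values should be of that order.

```python
import math

def quantise(x, bits):
    """Map x in [-1, 1] to the centre of one of 2**bits uniform intervals."""
    levels = 2 ** bits
    step = 2.0 / levels
    index = min(levels - 1, max(0, math.floor((x + 1.0) / step)))
    return -1.0 + (index + 0.5) * step

def error_power(samples, bits):
    """Mean squared difference between the samples and their quantised values."""
    return sum((x - quantise(x, bits)) ** 2 for x in samples) / len(samples)

def min_bits_for_error(samples, max_error_power, max_bits=24):
    """Smallest number of bits whose measured error power meets the target."""
    for bits in range(1, max_bits + 1):
        if error_power(samples, bits) <= max_error_power:
            return bits
    raise ValueError("target not reachable within max_bits")

# Hypothetical test signal: a full-scale sine standing in for real samples.
samples = [math.sin(2 * math.pi * 0.0123456 * n) for n in range(10_000)]
TARGET_ERROR_POWER = 1e-6  # illustrative threshold, not taken from the text

bits_needed = min_bits_for_error(samples, TARGET_ERROR_POWER)
print(bits_needed, 2 ** bits_needed, error_power(samples, bits_needed))
```

Each additional bit doubles the number of levels and halves the step, so the error power drops by roughly a factor of four per bit.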

So far so good, but this was “just” the theory. The other obstacle, practical but no less important, was the cost and clumsiness of the electronic computers of that time. Here another invention of the Bell Laboratories – the transistor – created the conditions for a later invention – the integrated circuit. This is at the basis of the unstoppable progress of computing devices, also known as Moore’s law, which has made it possible to build ever smaller and more powerful integrated devices, including computers, by reducing the size of circuit geometry on silicon (i.e. how close a “wire” of one circuit can be to a “wire” of another circuit).

It is nice to think that Nyquist’s research was funded by enlightened Bell Labs managers who foresaw that one day there could be digital devices capable of handling digitised telephone signals. Such devices would be used for two fundamental functions of the telecommunication business. The first is moving bits over a transmission link, the second is routing (switching) bits through the nodes of a network. Both these functions – transmission and switching – were performed by the networks of that time but in analogue form.

The real motivations were less exciting but no less serious. The problem that plagued analogue telephony was its unreliability, because the performance of analogue equipment tends to drift with time, typically because electrical components deteriorate slowly. A priori, it is not particularly problematic to have a complex system like the telephone network subject to error – nothing is perfect in this world. The problem arises when a large number of small random drifts add up in unpredictable ways and the performance of the system degrades below acceptable limits, without anyone being able to point the finger at a specific cause. More than by the grand design suggested above, the drive to digitisation was caused by the notion that, if signals were converted into ones and zeroes, one could create a network where devices either worked or did not work. If this were achieved, it would be possible to put in place procedures that would make spotting the source of a malfunction easier. Correcting the error would then easily follow: just change the faulty piece.

In the 1960s the CCITT made its first decision on the digitisation of telephone speech: a sampling frequency of 8 kHz and 8 bits/sample for a total bitrate of 64 kbit/s. Pulse Code Modulation (PCM) was the name given to the technology that digitised signals. But digitisation had an unpleasant by-product: converting a signal into digital form with sufficient accuracy creates so much information that transmission or storage requires a much larger bandwidth or capacity than that required by the original analogue signal. This was not just an issue for telecommunication, but also for broadcasting and Consumer Electronics, all of which used analogue signals for transmission – be it on air or cable – or storage, using some capacity-limited device. For computers this was not an issue – just yet – because at that time audio and video in digital form were data types still too remote from practical applications.
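The 64 kbit/s figure follows directly from multiplying the sampling frequency by the number of bits per sample. A minimal sketch of that arithmetic, with a hypothetical helper name:

```python
def pcm_bitrate(sample_rate_hz, bits_per_sample, channels=1):
    """Bitrate in bit/s of uncompressed PCM: samples/s x bits/sample x channels."""
    return sample_rate_hz * bits_per_sample * channels

# Telephone speech as decided by the CCITT: 8 kHz sampling, 8 bits/sample.
telephone_bps = pcm_bitrate(8_000, 8)
print(telephone_bps / 1000, "kbit/s")
```

By the sampling condition given earlier, an 8 kHz sampling frequency can only represent speech whose bandwidth is limited to less than 4 kHz.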

The benefits of digitisation did not extend just to the telco industry. Broadcasting was also a possible beneficiary because the effect of distortions on analogue radio and television signals would be greatly reduced – or would become more manageable – by conversion to digital form. The problem was again the amount of information generated in the process. Digitisation of a stereo sound signal (two channels) by sampling at 48 kHz with 16 bits/sample, one form of the so-called AES/EBU interface developed by the Audio Engineering Society (AES) and the European Broadcasting Union (EBU), generates 1,536 kbit/s. Digitisation of television by sampling the luminance information at 13.5 MHz and each of the two colour-difference signals at 6.75 MHz (this subsampling can be done because the eye is less demanding on colour information accuracy), with 8 bits/sample, generates 216 Mbit/s. Dealing with such high bitrates required special equipment that could only be used in the studio.
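Both broadcasting figures can be checked by summing the sampling frequencies of the component waveforms and multiplying by the sample size. The sketch below uses a hypothetical helper; the 8 bits/sample used for television is an assumption (it is the value that yields 216 Mbit/s from the stated sampling frequencies).

```python
def total_bitrate(component_sample_rates_hz, bits_per_sample):
    """Bitrate in bit/s of a signal made of several sampled waveforms."""
    return sum(component_sample_rates_hz) * bits_per_sample

# Stereo sound: two channels, each sampled at 48 kHz with 16 bits/sample.
stereo_bps = total_bitrate([48_000, 48_000], 16)

# Television: luminance at 13.5 MHz plus two colour-difference signals
# at 6.75 MHz each; 8 bits/sample is assumed here.
tv_bps = total_bitrate([13_500_000, 6_750_000, 6_750_000], 8)

print(stereo_bps / 1e3, "kbit/s")
print(tv_bps / 1e6, "Mbit/s")
```

Sampling the two colour-difference signals at half the luminance rate means that colour adds only as many samples per second as luminance itself, 27 million samples per second in total instead of 40.5 million.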

In the CE domain, digital made strong inroads at the beginning of the 1980s when Philips and Sony on the one hand, and RCA on the other, began to put on the market the first equipment that carried bits with the meaning of musical audio to consumers’ homes in the form of a 12-cm optical disc. After a brief battle, the Compact Disc (CD) defined by Philips and Sony prevailed over RCA’s. The technical specification of the CD was based on the sampling and quantisation characteristics of stereo sound: 44.1 kHz sampling and 16 bits/sample for each of the two channels, for a total bitrate of 1.41 Mbit/s. For the first time end-users could have studio-quality stereo sound in their homes at an increasingly affordable cost, with the same quality no matter how many times the CD was played, something that only digital technologies could make possible.
