| Previous chapter | Next section | Next chapter | |

| ToC | Software And Communication | Technology Challenging Rights | More About Rights and Technologies |

My sudden, although not unplanned, departure from DAVIC gave me the opportunity to realise some ideas I had been mulling over for some time. With MPEG and DAVIC I had dealt with machines that process data in a sophisticated, well-programmed way, but as intermediaries between humans or, at most, between humans and machines. What if machines themselves processed data (à la MPEG-7) and exchanged it autonomously with other machines, of course within the limits set by humans? It was a bold undertaking that would possibly involve some industries beyond those I had become accustomed to working with, but I thought I had developed a good recipe for creating technical standards that enable new opportunities from unexpected directions.

A few days after the conclusion of the DAVIC meeting in December 1995 in Berlin, I was already deep into the Christmas holidays and had made substantial progress in developing the idea. In a letter to some friends at the beginning of January 1996, I outlined the mission of a new organisation that I called Foundation for Intelligent Physical Agents (FIPA):

Important new domains of economic activity that retain growth in the 1990’s and beyond can be created by assembling technologies from different industries, as in the case of multimedia. Intelligent Physical Agents, i.e. devices for the mass market, capable of executing physical actions under the instruction of human beings, with a high degree of intelligence, will be possible if existing technologies are drawn from the appropriate industries and integrated. The integration task will be executed by an organisation, operating under similar rules as MPEG and DAVIC, where interested parties can jointly specify generic subsystems and the supporting generic technologies.

Between January and March 1996, documents were circulated to a select group of individuals who helped refine the basic ideas. Gradually the discussion focused on the idea of “agents”: entities that reside in an environment, where they interpret “sensor” data reflecting events in the environment, process them and execute “motor” commands that produce effects in the environment.

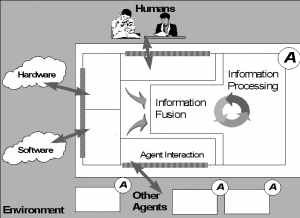

The notion of “agents”, namely software entities with special characteristics, had been explored by the academic community for a number of years. Fig. 1, drawn by Abe Mamdani of Imperial College, gives a pictorial representation of this concept.

Figure 1 – A model for agents

The letter A represents an agent, an entity that can interact with its environment, which is made up of software (possibly interfaced with hardware), other agents and humans. The agent “fuses” and processes the information coming from these different sources, possibly in order to interact further with the environment.
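The sense, fuse and act cycle of Fig. 1 can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a FIPA construct: the `Agent` class, the averaging used as the “fusion” step and the thermostat rule are all hypothetical choices made only to show several sensor sources being combined into one value that drives a “motor” command.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    # Hypothetical: maps a sensed quantity to a rule that turns its fused value into a command.
    rules: Dict[str, Callable[[float], str]]
    log: List[str] = field(default_factory=list)

    def fuse(self, readings: Dict[str, List[float]]) -> Dict[str, float]:
        # Assumed fusion step: average all sources reporting the same quantity.
        return {k: sum(v) / len(v) for k, v in readings.items() if v}

    def step(self, readings: Dict[str, List[float]]) -> List[str]:
        # One sense -> fuse -> act cycle; quantities without a rule are ignored.
        fused = self.fuse(readings)
        commands = [self.rules[k](value) for k, value in fused.items() if k in self.rules]
        self.log.extend(commands)
        return commands


thermostat = Agent(rules={"temperature": lambda t: "heat_on" if t < 19.0 else "heat_off"})
print(thermostat.step({"temperature": [18.0, 18.5, 19.0], "humidity": [40.0]}))  # ['heat_on']
```

A real agent would run this cycle continuously and could replace the fixed rules with learned behaviour; the point here is only the separation between perception, fusion and action.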

Agents are characterised by a number of features:

| Feature | Able to |
|---|---|
| Autonomous | Operate without the direct intervention of humans |
| Social | Interact with other agents and/or humans |
| Reactive | Perceive the environment and respond to changes occurring in it in a timely fashion |
| Pro-active | Exhibit goal-directed behaviour by taking the initiative |
| Mobile | Move from one environment to another |
| Time-continuous | Run processes continuously, not “one-shot” computations that terminate |
| Adaptive | Adapt automatically to changes in their environment |

Richard Nicol was at that time deputy director of research at British Telecom Laboratories (BTL). I had worked with him for 8 years in the COST 211 project, where he was chairman of the Hardware Subgroup, on the H.100 series of CCITT Recommendations and in the first phases of the Okubo group. In his organisation he had set up a sizeable research group working on agents, and he responded favourably to my proposal to host the first meeting. This was held at Imperial College in London in April 1996 and was attended by 35 people from 7 countries.

The meeting agreed that it was desirable to establish an international organisation tasked with developing generic agent standards with the participation of all players in the field. It also developed the basic principles of generic agent standardisation and agreed on a work plan calling for a first specification of a set of agent technologies to be achieved by the end of 1997.

IBM hosted the second meeting at its TJ Watson Research Center in Yorktown, NY. Attended by 60 people, it was organised as a workshop with some 40 presentations covering the areas of “Input/Output primitives”, “Agents”, “Communication” and “Society of Agents”. The meeting produced a framework document for FIPA activities, preliminary drafts of FIPA applications and requirements, and a list of agent technologies that were candidates for a 1997 specification.

As a result of one of the Yorktown resolutions, in September 1996 five individuals met in Geneva in the office of Me Jean-Pierre Jacquemoud, who had also handled the papers for the registration of DAVIC, to sign the statutes establishing FIPA as a not-for-profit association under Swiss Civil Law, as had been done for DAVIC and would later be done for M4IF. In line with the work plan, the third meeting was held in Tokyo in October, hosted by NHK. It was expected to produce a Call for Proposals (CfP), and the fact that it did so in the short span of 5 working days (and nights) was a remarkable achievement.

The submissions received from attendees were used to identify twelve application areas of interest, of which 4 were retained: Personal Assistant, Personal Travel Assistance, Audio-Visual Entertainment and Broadcasting, and Network Provisioning and Management. Next, the agent technologies needed to support these four applications were identified, and the list was then restricted to those that could feasibly be specified within a year. Finally, the general shape of the applications that FIPA 97 would make possible was described. At the end of this long marathon, the CfP was drafted, approved and released.

All this had been interesting and personally rewarding, because the spirit that drives the work of MPEG had been recreated in an environment with people from very different backgrounds and with almost no overlap with MPEG and DAVIC. But that was just the preparation for the big match: the January 1997 meeting hosted by CSELT in Turin. The 19 submissions received provided sufficient material to start the work and, in particular, made it possible to select a proposal for the Agent Communication Language (ACL). A second CfP was nevertheless issued because more technologies had been identified as necessary.

During a series of exciting week-long meetings held at quarterly intervals in different locations around the world, FIPA developed its first series of specifications, called FIPA 97, and ratified them in October 1997 at the Munich meeting hosted by Siemens.

Reaching this first milestone drew immediate responses from industry. Several companies began developing FIPA 97-conforming platforms or adapting their existing developments to the FIPA 97 specification. Some of these platforms have been released as open source and have acquired a large number of followers and users. At the January 1999 meeting in Seoul, FIPA conducted its first interoperability trials: four companies, CSELT among them, using different hardware, operating systems, ORBs and languages, successfully tested the interoperability and compatibility of their agent platforms by connecting them on a single LAN.

The following year another set of specifications was developed and approved as FIPA 98 in October at the Durham, NC meeting hosted by IBM.

One year later, at the October 1999 meeting in Kawasaki hosted by Hitachi, I was again faced with a new concentration of commitments, particularly from SDMI, that demanded a large share of my time, so I decided not to stand for re-election to the Board of Directors.

A major rewrite of the earlier FIPA specifications was carried out and approved as FIPA 2000. In parallel, a new specification called Abstract Architecture, started in April 1999, was developed; the rewriting of FIPA 97 as FIPA 2000 is a “reification” of it.

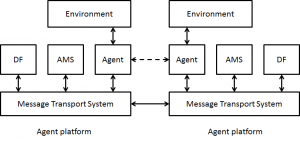

I would like to spend a few words describing the content of the FIPA standard and how it can benefit the wide range of communities that were FIPA’s original target. FIPA is essentially a communication standard that specifies how agents and Agent Platforms (AP) communicate with one another. To achieve this, a model of an AP must be specified. As Fig. 2 shows, an AP is composed of 4 entities:

- one or more agents

- an Agent Management System (AMS)

- one or more Directory Facilitators (DF)

- one or more Message Transport Systems (MTS).

Fig. 2 – Communicating agents and agent platforms

One should not confuse an AP with a single computing environment: it may very well be that two or more APs reside on the same computer or that the AP is distributed on a number of computers.

According to FIPA, an agent is an active software entity designed to perform certain functions, e.g. interacting with the environment and communicating with other agents via the ACL. The AMS is the agent that supervises access to and use of AP resources, maintains a directory of resident agents (so-called white pages) associating logical agent identifiers to state and address information, and handles the agents’ life cycle. The DF is an agent providing yellow page services with matchmaking capabilities.
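The white-pages and yellow-pages roles just described can be illustrated with a toy in-memory model. This is a sketch under assumptions, not the FIPA Agent Management interface: the class names, the agent identifiers, the addresses and the subset-based matchmaking rule are all hypothetical, and the agent life cycle is reduced to a free-form state label.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class AgentManagementSystem:
    # White pages: logical agent identifier -> address and life-cycle state.
    registry: Dict[str, Dict[str, str]] = field(default_factory=dict)

    def register(self, aid: str, address: str) -> None:
        if aid in self.registry:
            raise ValueError(f"duplicate agent identifier: {aid}")
        self.registry[aid] = {"address": address, "state": "active"}

    def set_state(self, aid: str, state: str) -> None:
        # Life-cycle handling, reduced here to a free-form state label.
        self.registry[aid]["state"] = state

    def lookup(self, aid: str) -> str:
        return self.registry[aid]["address"]


@dataclass
class DirectoryFacilitator:
    # Yellow pages: agent identifier -> set of advertised service types.
    services: Dict[str, Set[str]] = field(default_factory=dict)

    def advertise(self, aid: str, *service_types: str) -> None:
        self.services.setdefault(aid, set()).update(service_types)

    def search(self, wanted: Set[str]) -> List[str]:
        # Matchmaking (assumed rule): agents offering every requested service type.
        return sorted(aid for aid, offered in self.services.items() if wanted <= offered)


# Hypothetical identifiers and addresses, for illustration only.
ams, df = AgentManagementSystem(), DirectoryFacilitator()
ams.register("travel@ap1", "http://ap1.example.com/acc")
ams.register("weather@ap1", "http://ap1.example.com/acc")
df.advertise("travel@ap1", "flight-booking", "hotel-booking")
df.advertise("weather@ap1", "forecast")
print(df.search({"hotel-booking"}))            # ['travel@ap1']
print(ams.lookup(df.search({"forecast"})[0]))  # http://ap1.example.com/acc
```

Keeping the two directories separate mirrors the division of labour in the text: the DF answers “who can do this?”, while the AMS answers “where is this agent and in what state?”.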

The major elements of the FIPA standard are thus three specifications:

- AP (the model of an agent platform)

- MTS (how APs communicate)

- ACL (how agents exchange messages).
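To give a feel for what an ACL message carries, here is a minimal sketch. The fields are modelled on common FIPA ACL message parameters (performative, sender, receiver, content, language, ontology, conversation-id), but the `AclMessage` class, the small performative subset, the `reply` helper and the text rendering are illustrative assumptions; the rendering only loosely imitates a parenthesised string form and is not the normative encoding.

```python
from dataclasses import dataclass
from typing import List, Optional

# Small illustrative subset of communicative acts (performatives).
PERFORMATIVES = {"inform", "request", "agree", "refuse", "cfp", "propose",
                 "accept-proposal", "reject-proposal"}


@dataclass
class AclMessage:
    performative: str
    sender: str
    receivers: List[str]
    content: str
    language: Optional[str] = None
    ontology: Optional[str] = None
    conversation_id: Optional[str] = None

    def __post_init__(self) -> None:
        if self.performative not in PERFORMATIVES:
            raise ValueError(f"unsupported performative: {self.performative}")
        if not self.receivers:
            raise ValueError("a message needs at least one receiver")

    def reply(self, sender: str, performative: str, content: str) -> "AclMessage":
        # Answer the original sender, keeping language, ontology and conversation.
        return AclMessage(performative, sender, [self.sender], content,
                          self.language, self.ontology, self.conversation_id)

    def to_text(self) -> str:
        # Loose imitation of a parenthesised string form, not the normative syntax.
        parts = [f"({self.performative}", f":sender {self.sender}",
                 f":receiver (set {' '.join(self.receivers)})", f':content "{self.content}"']
        for key, value in (("language", self.language), ("ontology", self.ontology),
                           ("conversation-id", self.conversation_id)):
            if value is not None:
                parts.append(f":{key} {value}")
        return " ".join(parts) + ")"


ask = AclMessage("request", "pa@ap1", ["travel@ap2"], "book flight TRN-LHR",
                 ontology="travel", conversation_id="c42")
ok = ask.reply("travel@ap2", "agree", "book flight TRN-LHR")
print(ok.to_text())
# (agree :sender travel@ap2 :receiver (set pa@ap1) :content "book flight TRN-LHR" :ontology travel :conversation-id c42)
```

The design point is that the performative states the intent of the message independently of its content, so two agents built by different companies can agree on *what kind* of exchange is taking place even before they agree on a shared ontology.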

My group at CSELT put considerable effort into implementing the FIPA specifications. The most visible result, driven by Fabio Bellifemine, a key figure in FIPA since the early meetings, has been the development of the Java Agent DEvelopment Framework (JADE), an open source project released under the GNU Lesser General Public License (LGPL), with a large community of users spanning the entire spectrum of communication industries, academia included. Started as an internal CSELT project, JADE was significantly enhanced thanks to LEAP, a CEC-funded IST project. As a result of a huge collaborative effort, this project has provided a version of JADE suitable for memory-constrained devices such as new-generation cellular phones.

JADE can be used in any environment where complex tasks, whose execution depends on a large number of unpredictable events, have to be carried out. One example is a large factory that needs to optimise the delivery of spare parts to warehouses and from there to the assembly lines, as well as the dispatching of products to warehouses and from there to customers.

Another example is the coordination of a large work force scattered over a geographical area, as found in utilities. In the scenario of Fig. 3:

- Customers report intervention needs to a call centre

- A team manager supervises the work force elements (field engineers)

- Agents located on mobile devices retrieve jobs and trade jobs and shifts, but also receive guidance from a route planning system and retrieve documentation

- The team manager applies policies and checks progress instead of performing the tedious task of allocating jobs.

Fig. 3 – Work force coordination
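The text does not say how the field engineers’ agents trade jobs. One plausible way is a contract-net-style exchange (call for proposals, bids, acceptance of the best bid), the pattern behind FIPA’s Contract Net interaction protocol. The sketch below assumes that pattern; the `EngineerAgent` class, the `award` function and the cost function (travel distance plus a fixed penalty per job already held) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple


@dataclass
class EngineerAgent:
    name: str
    location: Tuple[float, float]
    skills: Set[str]
    jobs: List[str] = field(default_factory=list)

    def propose(self, job_location: Tuple[float, float], skill: str) -> Optional[float]:
        # Refuse (None) without the skill; otherwise bid distance plus a penalty per job held.
        if skill not in self.skills:
            return None
        dx = self.location[0] - job_location[0]
        dy = self.location[1] - job_location[1]
        return (dx * dx + dy * dy) ** 0.5 + 10.0 * len(self.jobs)


def award(job_id: str, job_location: Tuple[float, float], skill: str,
          team: List[EngineerAgent]) -> Optional[str]:
    # Call for proposals -> collect bids -> accept the cheapest, implicitly reject the rest.
    bids = [(agent.propose(job_location, skill), agent) for agent in team]
    valid = [(cost, agent) for cost, agent in bids if cost is not None]
    if not valid:
        return None  # nobody can take the job: escalate to the team manager
    _, winner = min(valid, key=lambda bid: bid[0])
    winner.jobs.append(job_id)
    return winner.name


team = [EngineerAgent("anna", (0.0, 0.0), {"gas", "water"}),
        EngineerAgent("bruno", (5.0, 5.0), {"water"})]
print(award("J1", (4.0, 4.0), "water", team))     # bruno
print(award("J2", (1.0, 0.0), "gas", team))       # anna
print(award("J3", (4.0, 4.0), "electric", team))  # None
```

Here the team manager only sets the policy (the cost function) and handles escalations, which matches the shift in role described in the list above.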

What JADE enables is a straightforward implementation of Peer-to-Peer (P2P) communication. The client-server paradigm assumes that intelligence and resources are concentrated in the server, which merely reacts to clients’ requests, while clients have little intelligence and few resources but take the initiative of requesting services from the server. P2P removes this asymmetry by making each peer capable of playing both roles.
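The difference between the two paradigms can be made concrete with a toy peer that both offers services (server role) and requests them (client role). This is a sketch under a simplifying assumption: direct in-process method calls through a shared dictionary stand in for real message transport, and the `Peer` class and service names are hypothetical.

```python
from typing import Callable, Dict


class Peer:
    """A node that serves requests (server role) and issues them (client role)."""

    def __init__(self, name: str, network: Dict[str, "Peer"]):
        self.name = name
        self.network = network  # shared directory of reachable peers (assumed)
        self.handlers: Dict[str, Callable[[str], str]] = {}
        network[name] = self

    def offer(self, service: str, handler: Callable[[str], str]) -> None:
        # Server role: make a service available to other peers.
        self.handlers[service] = handler

    def handle(self, service: str, payload: str) -> str:
        if service not in self.handlers:
            return f"refuse: {self.name} does not offer {service}"
        return self.handlers[service](payload)

    def ask(self, other: str, service: str, payload: str) -> str:
        # Client role: take the initiative and request a service from another peer.
        return self.network[other].handle(service, payload)


net: Dict[str, Peer] = {}
phone_a, phone_b = Peer("phone-a", net), Peer("phone-b", net)
phone_a.offer("upper", lambda s: s.upper())
phone_b.offer("length", lambda s: str(len(s)))
print(phone_b.ask("phone-a", "upper", "hi"))       # HI
print(phone_a.ask("phone-b", "length", "hello"))   # 5
```

Each device is simultaneously a provider and a consumer, so no single node has to concentrate all the intelligence and resources.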

This last case allows me to depict a realistic scenario, in which JADE lets a set of agents resident in (multiple) mobile communication devices transform the mobile device into the user’s alter ego. In Fig. 4 the agent possesses domain knowledge, learns from the user by examples, observes and learns how the user interacts with the device and can even request advice from its peers.

Fig. 4 – Devices as user’s alter ego

JADE makes it easy to create communities driven by the deep understanding that personal agents can build of their masters by observing their actions. Gone would be the days when my alter ego was out of my control and in the hands of some service provider (SP). A key technology for implementing this paradigm in practice is clearly the ability to learn from user actions.
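The text does not specify a learning technique, so the following is only a deliberately simple stand-in: an agent that “learns by example” by counting which action the user takes in each context, and suggests an action only once it has seen enough consistent examples. The `AlterEgo` class, its thresholds and the context strings are hypothetical; when the agent is unsure it returns `None`, which is the point where it could defer to the user or ask its peers for advice.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


class AlterEgo:
    """Learns by example: counts the action the user takes in each context."""

    def __init__(self, min_confidence: float = 0.6, min_examples: int = 3):
        self.history: Dict[str, Counter] = defaultdict(Counter)
        self.min_confidence = min_confidence
        self.min_examples = min_examples

    def observe(self, context: str, action: str) -> None:
        # Record one example of user behaviour.
        self.history[context][action] += 1

    def suggest(self, context: str) -> Optional[str]:
        counts = self.history.get(context)
        if not counts:
            return None
        total = sum(counts.values())
        action, n = counts.most_common(1)[0]
        if total < self.min_examples or n / total < self.min_confidence:
            return None  # not sure yet: leave the decision to the user (or ask peers)
        return action


ego = AlterEgo()
for _ in range(3):
    ego.observe("call from boss during meeting", "decline with SMS")
ego.observe("call from boss during meeting", "answer")
print(ego.suggest("call from boss during meeting"))  # decline with SMS
print(ego.suggest("call from unknown number"))       # None
```

Because all the observations stay on the device, the model of the user remains under the user’s control rather than with a service provider.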
