ITU-T has promulgated a number of standards for digital transmission, starting from the foundational standard for digital representation of telephone speech at 64 kbit/s. This is actually a two-edged cornerstone because the standard specifies two different methods to digitise speech: A-law and µ-law. Both use the same sampling frequency (8 kHz) and the same number of bits/sample (8) but two different non-linear quantisation characteristics to take into account the logarithmic sensitivity of the ear to audio intensity. Broadly speaking, µ-law is used in North America and Japan and A-law is used in the rest of the world.
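The arithmetic and the two companding curves can be sketched in a few lines. This is only an illustration of the idea: it uses the textbook continuous formulas with the customary constants µ = 255 and A = 87.6, which are assumptions not stated in the text above, whereas deployed codecs use a piecewise-linear approximation of these curves. All function names are hypothetical.

```python
import math

SAMPLE_RATE_HZ = 8_000   # sampling frequency given in the text
BITS_PER_SAMPLE = 8      # bits per sample given in the text
MU = 255.0               # assumed: customary mu-law constant
A = 87.6                 # assumed: customary A-law constant


def speech_bitrate_bps() -> int:
    """Bitrate of one digitised telephone channel."""
    return SAMPLE_RATE_HZ * BITS_PER_SAMPLE


def mu_law_compress(x: float) -> float:
    """Continuous mu-law curve for x in [-1, 1]."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def a_law_compress(x: float) -> float:
    """Continuous A-law curve for x in [-1, 1]."""
    ax = abs(x)
    denom = 1.0 + math.log(A)
    if ax < 1.0 / A:
        y = A * ax / denom                    # linear segment near zero
    else:
        y = (1.0 + math.log(A * ax)) / denom  # logarithmic segment
    return math.copysign(y, x)


if __name__ == "__main__":
    print(speech_bitrate_bps())
    for level in (0.01, 0.1, 1.0):
        print(level, mu_law_compress(level), a_law_compress(level))
```

Both curves expand small amplitudes and compress large ones, so the 8 available bits are spent where the ear is most sensitive.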

While any telephone subscriber can technically communicate with any other telephone subscriber in the world, there are differences in how the communication is actually set up. If the two subscribers wishing to communicate belong to the same local switch, they are directly connected via that switch. If they belong to different switches, they are connected through a long-distance link where a number of telephone channels are “multiplexed” together. The size of the multiplexer depends on the likelihood that a subscriber belonging to switch A will want to connect to a subscriber belonging to switch B at the same time. This pattern is then repeated through a hierarchy of switches.

When telephony was analogue, multiplexers were implemented using a Frequency Division Multiplexing (FDM) technique where each telephone channel was assigned a 4 kHz slice. The hierarchical architecture of the network did not change with the use of digital techniques. The difference was in the technique used, Time Division Multiplexing (TDM) instead of FDM. In TDM a given period of time is assigned to the transmission of the 8 bits of a sample of one telephone channel, followed by a sample of the next telephone channel, etc. Having made different choices at the starting point, Europe and the USA (with Japan also asserting its difference with yet another multiplexing hierarchy) kept on doing so with the selection of different transmission multiplexers: the primary USA multiplexer has 24 speech channels – also called Time Slots (TS) – plus 8 kbit/s of other data for a total bitrate of 1,544 kbit/s. The primary European multiplexer has 30 speech channels plus two non-speech channels for a total bitrate of 2,048 kbit/s.

While both forms of multiplexing do the job they are expected to do, the structure of the 1,544 kbit/s multiplex is a bit clumsy, with 8 kbit/s inserted in an ad hoc fashion in the 1,536 kbit/s of the 24 TSs. The structure of the 2,048 kbit/s multiplex is cleaner because the zero-th TS (TS 0) carries synchronisation, the 16th TS (TS 16) is used for network signalling purposes and the remaining 30 TSs carry the speech channels. The American transmission hierarchy is 1.5/6/45 Mbit/s and the European hierarchy is 2/8/34/140 Mbit/s.
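The two primary rates follow directly from the slot counts quoted above. The sketch below simply redoes that arithmetic. The per-frame view (one 8-bit sample per slot, 8,000 frames per second, and the extra 8 kbit/s of the American multiplex appearing as one additional bit per frame) is the usual way of presenting it and is labelled here as an assumption, since the text only gives the totals. Names are hypothetical.

```python
CHANNEL_BPS = 64_000       # one digitised speech channel (8 kHz x 8 bits)
FRAMES_PER_SECOND = 8_000  # one frame per speech sampling period
BITS_PER_SLOT = 8


def us_primary_bps() -> int:
    """24 time slots plus 8 kbit/s of other data."""
    return 24 * CHANNEL_BPS + 8_000


def european_primary_bps() -> int:
    """30 speech slots plus TS 0 (synchronisation) and TS 16 (signalling)."""
    return (30 + 2) * CHANNEL_BPS


def us_frame_bits() -> int:
    """Assumed frame layout: 24 slots of 8 bits plus one extra bit."""
    return 24 * BITS_PER_SLOT + 1


def european_frame_bits() -> int:
    """32 slots of 8 bits, with no extra bits outside the slots."""
    return 32 * BITS_PER_SLOT


if __name__ == "__main__":
    print(us_primary_bps(), us_frame_bits() * FRAMES_PER_SECOND)
    print(european_primary_bps(), european_frame_bits() * FRAMES_PER_SECOND)
```

Seen this way, the "clumsiness" of the 1,544 kbit/s structure is that its frame length is not a whole number of time slots, while the 2,048 kbit/s frame is exactly 32 slots.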

The bifurcation was a deliberate decision of the European PTT Administrations, each having strong links with national manufacturers (at least two of them, as was the policy at that time, so as to retain a form of competition in procurement), who feared that, by adopting a worldwide standard for digital speech and multiplexing, their industries would succumb to the more advanced American manufacturing industry. These different communication standards notwithstanding, the ability of end-users to communicate was not affected because speech was digitised only for the purpose of core network transmission, while end-user devices continued to receive and transmit analogue speech.

The development of Group 3 facsimile was driven by the more enlightened approach of providing an End-to-End (E2E) interoperable solution. This is not surprising because the “service-oriented” portions of the telcos and related manufacturers, many of which were not typical telco manufacturers, were the driving force for this standard that sought to open up new services. The system is still in use today, although with a downward trend, after some 40 years of existence and with hundreds of millions of devices sold.

The dispersed world of television standards found its unity again – sort of – when the CCIR approved Recommendations 601 and 656 related to digital television. Almost unexpectedly, agreement was found on a universal sampling frequency of 13.5 MHz for luminance and 6.75 MHz for the colour difference signals. By specifying a single sampling frequency, NTSC and PAL/SECAM can be represented by a bitstream with an integer number of samples per second, per frame and per line. The number of active samples per line is 720 for Y (luminance) and 360 for each of U and V (colour difference signals). This format is also called 4:2:2, where the three numbers represent the ratio of the Y:U:V sampling frequencies. This sort of reunification, however, was achieved only in the studio, where Recommendation 656 is mostly confined because 216 Mbit/s is a very high bitrate, even by today’s standards. Recommendations 601 and 656 are linked to Recommendation 657, the digital video recorder standard known as D1. While this format has not been very successful market-wise, it played a fundamental role in the picture coding community because it was the first device available on the market that enabled storage, exchange and display of video without the degradation introduced by analogue storage devices.
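The "integer number of samples" property and the 216 Mbit/s figure can be checked with exact rational arithmetic. The sketch below is a rough verification, not a statement of the Recommendations themselves. It assumes the usual scanning parameters (625 lines at 25 frames/s, and 525 lines at 30000/1001 frames/s) and 8 bits per sample, none of which are spelled out in the text. Names are hypothetical.

```python
from fractions import Fraction

Y_HZ = Fraction(13_500_000)  # luminance sampling frequency
C_HZ = Fraction(6_750_000)   # each colour difference signal
BITS_PER_SAMPLE = 8          # assumed sample depth

# Assumed scanning parameters for the two families of systems.
SYSTEMS = {
    "625/50": {"lines": 625, "frame_rate": Fraction(25)},
    "525/59.94": {"lines": 525, "frame_rate": Fraction(30_000, 1001)},
}


def samples_per_frame(name: str) -> Fraction:
    """Luminance samples in one full frame (active plus blanking)."""
    return Y_HZ / SYSTEMS[name]["frame_rate"]


def samples_per_line(name: str) -> Fraction:
    """Luminance samples in one full line (active plus blanking)."""
    return samples_per_frame(name) / SYSTEMS[name]["lines"]


def total_bitrate_bps() -> int:
    """Y plus the two colour difference signals, i.e. 4:2:2."""
    return int((Y_HZ + 2 * C_HZ) * BITS_PER_SAMPLE)


if __name__ == "__main__":
    for name in SYSTEMS:
        print(name, samples_per_line(name), samples_per_frame(name))
    print(total_bitrate_bps())
```

Under these assumptions both systems yield whole numbers of samples per line and per frame, which is what makes 13.5 MHz usable as a common sampling frequency.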

ITU-T also promulgated several standards for compressed speech and video. One of them is the 1.5/2 Mbit/s videoconference standard of the “H.100 series”, currently no longer in use, but nevertheless important because it was the first international standard for a digital audio-visual communication terminal. This transmission system was originally developed by a European collaborative project called COST 211 (COST stands for Collaboration Scientifique et Technique and 211 stands for area 2 – telecommunication, project no. 11) in which I represented Italy.

It was a remarkable achievement because the project designed a complete terminal capable of transmitting audio, video, facsimile and other data, including End-to-End signalling. The video coding, very primitive if seen with today’s eyes, was a full implementation of a DPCM-based Conditional Replenishment scheme. My group at CSELT developed one of the four prototypes, the other three being those of British Telecom, Deutsche Telekom and France Telecom (these three companies had different names at that time, because they were government-run monopolies). The prototypes were tested for interoperability using 2 Mbit/s links over communication satellites, another first. The CSELT prototype was an impressive pair of 6U racks made of Medium Scale Integration (MSI) and Large Scale Integration (LSI) circuits that even contained a specially-designed Very Large Scale Integration (VLSI) circuit for change detection that used the “advanced” – for the late 1970s – 4 µm geometry!

Videoconferencing, international by definition because it served the needs of business relations trying to replace long-distance travel with a less time-consuming alternative, was soon confronted with the need to deal with the incompatibilities of television standards and transmission rates that nationally-oriented policies had built over the years. Since COST 211 was a European project, the original development assumed that video was PAL and transmission rate was 2,048 kbit/s. The former assumption had an important impact on the design of the codec, starting from the size of the frame memory.

By the time the work was being completed, however, it was belatedly “realised” that the world had different television standards and different transmission systems. A solution was required, unless every videoconference room in the world was equipped with cameras and monitors of the same standard – PAL – clearly wishful thinking. Unification of video standards is something that had been achieved – for safety reasons – in the aerospace domain, where all television equipment is NTSC, but it was not something that would ever happen in the fragmented business world of telecommunication terminals and certainly not in the USA where even importing non-NTSC equipment was illegal at that time.

A solution was found by first making a television “standard conversion” between NTSC and PAL in the coding equipment, then using the PAL-based digital compression system as originally developed by COST 211, and finally outputting the signal in PAL, if that was the standard used at the receiving end, or making one more standard conversion at the output, if the receiving end was NTSC. Extension to operate at 1.5 Mbit/s was easier to achieve because the transmission buffer just tended to fill up more quickly than with a 2 Mbit/s channel, but the internal logic remained unaltered.

At the end of the project, the COST 211 group realised that there were better performing algorithms – linear transformation with motion compensation – than the relatively simple but underperforming DPCM-based Conditional Replenishment scheme used by COST 211. This was quite a gratification for a person who had worked on transform coding soon after being hired and had always shunned DPCM as an intraframe video compression technology without a future in mass communication. Instead of doing the work as a European project and then trying to “sell” the results to the international ITU-T environment – not an easy task, as had been discovered with H.100 – the decision was made to do the work in a competitive/collaborative environment similar to COST 211 but within the international arena, as a CCITT “Specialists Group”. This was the beginning of the development work that eventually gave rise to ITU-T Recommendation H.261 for video coding at p×64 kbit/s (p = 1, …, 30). An extension of COST 211, called COST 211 bis, continued to play a “coordination” role for European participation in the Specialists Group.

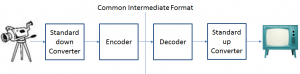

The first problem that the Chairman, Sakae Okubo, then with NTT, had to resolve was again the difference in television standards. The group decided to adopt an extension of the COST 211 approach, i.e. conversion of the input video signal to a new “digital television standard”, with the number of lines of PAL and the number of frames/second of NTSC – using the commendable principle of “burden sharing” between the two main television formats. The resulting signal, digitised and suitably subsampled to 288 lines of 352 samples at a frame rate of 29.97 Hz, would then undergo the digital compression process that was later specified by H.261. The number 352 was derived from 360, ½ the 720 pixels of digital television, but with a reduced number of pixels per line because it had to be divisible by 16, a number required by the block-coding algorithm of H.261.
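The derivation of the 352 × 288 picture size can be reproduced as a small calculation. The sketch assumes that the 288 lines come from halving 576 active lines of a 625-line picture and that the coding unit is a 16 × 16 block; the text above states only the results and the divisibility-by-16 requirement. Names are hypothetical.

```python
from fractions import Fraction

ACTIVE_SAMPLES_PER_LINE = 720       # digital television luminance samples
ACTIVE_LINES_625 = 576              # assumed active lines of a 625-line picture
BLOCK = 16                          # divisibility required by the block coding
FRAME_RATE = Fraction(30_000, 1001) # approximately 29.97 Hz


def largest_multiple_at_most(value: int, step: int) -> int:
    """Largest multiple of `step` that does not exceed `value`."""
    return (value // step) * step


def cif_width() -> int:
    """Halve 720 to 360, then reduce to a multiple of 16."""
    return largest_multiple_at_most(ACTIVE_SAMPLES_PER_LINE // 2, BLOCK)


def cif_height() -> int:
    """Halve the assumed 576 active lines; 288 is already a multiple of 16."""
    return largest_multiple_at_most(ACTIVE_LINES_625 // 2, BLOCK)


def blocks_per_picture() -> int:
    """Number of 16 x 16 blocks covering one picture."""
    return (cif_width() // BLOCK) * (cif_height() // BLOCK)


if __name__ == "__main__":
    print(cif_width(), cif_height(), blocks_per_picture())
    print(float(FRAME_RATE))
```

Halving 720 gives 360, which is not a multiple of 16, so eight samples per line are dropped; the line count needs no such adjustment.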

I was part of that decision, made at the Turin meeting in 1984. At that time I had already become hyper-sensitive to the differences in television standards. I thought that the troubles created by the divisive approach followed by our forefathers had taught a clear enough lesson and the world did not need yet another, no matter how well-intentioned, television standard, even if it was confined inside the digital machine that encoded and decoded video signals. I was left alone and the committee went on to approve what was called “Common Intermediate Format” (CIF). This regrettable decision set aside, H.261 remains the first example of truly international collaboration in the development of a technically very complex video compression standard.

Figure 1 – The Common Intermediate Format

The “Okubo group”, as it was soon called, made a thorough investigation of the best video coding technologies and assembled a reasonably performing video coding system for bitrates, like 64 kbit/s, that were once considered unattainable (even though some prototypes based on proprietary solutions had already been shown before). The H.261 algorithm can be considered the progenitor of most video coding algorithms commonly in use today, even though the equipment built against this standard was not particularly successful in the marketplace. The first reason is that the electronics required to perform the complex calculations made the terminals bulky and expensive. No one dared make the large investments needed to manufacture integrated circuits that would have reduced the size of the terminal and hence the cost (the rule of thumb used to be that the cost of electronics is proportional to its volume). The high cost made the videoconference terminal a device centrally administered in companies, thus discouraging impulse users. Moreover, the video quality when using H.261 at about 100 kbit/s, the bitrate remaining when using 2 Integrated Services Digital Network (ISDN) time slots after subtracting the bitrate required by audio (when this was actually compressed) and other ancillary signals, was far from satisfactory for business users, while consumers had already been scared away by the price of the device. Lastly, there remains the still unanswered question: do people really wish or need to see the face of the person they are talking to over the telephone on a video screen?