Starting from Video, MPEG has gradually covered most of the media and related spectrum:

- Video

- Audio

- Systems technologies holding the two together, the interfaces with transport and, in the case of MPEG-2, the transport itself

- 3D scene creation with such “objects” as 2D and 3D graphics and synthetic audio, together with their composition

- Media metadata including the capability to detect the existence of a specified audio or video object (MPEG-7 and in particular CDVA)

- Reference model and the data formats for interaction with real and virtual worlds (MPEG-V)

- A standard for user interfaces (MPEG-U).

So MPEG is well placed to deal with two areas that have gained notoriety and some market traction: Virtual Reality (VR) and Augmented Reality (AR). But first we need to say what is meant by these words:

- Virtual Reality is an environment created by a computer and presented to the user in a way that becomes more realistic as more intelligent software, more powerful computers and more engaging presentation technologies are used to involve more human senses, starting obviously from sight and sound

- Augmented Reality is an environment where computer-generated images or video are superimposed on a view of the real world and the result is presented to the user.
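At the pixel level, "superimposing" a computer-generated image on a view of the real world is commonly done with alpha compositing. The sketch below is a generic illustration of that idea, not something ARAF specifies. It assumes grayscale images stored as nested lists of values in [0, 1] and a per-pixel opacity mask; the function name `superimpose` and the data layout are hypothetical.

```python
from typing import List

# Hypothetical image representation: rows of grayscale pixel values in [0, 1].
Image = List[List[float]]


def superimpose(real_view: Image, overlay: Image, alpha: Image) -> Image:
    """Blend a computer-generated overlay onto a view of the real world.

    alpha[y][x] = 1.0 shows only the overlay at that pixel,
    alpha[y][x] = 0.0 shows only the real view, values in between mix the two.
    """
    if not (len(real_view) == len(overlay) == len(alpha)):
        raise ValueError("images must have the same height")
    result: Image = []
    for rv_row, ov_row, a_row in zip(real_view, overlay, alpha):
        if not (len(rv_row) == len(ov_row) == len(a_row)):
            raise ValueError("images must have the same width")
        # Standard "over" blend: weighted sum of overlay and real-view pixels.
        result.append(
            [a * ov + (1.0 - a) * rv for rv, ov, a in zip(rv_row, ov_row, a_row)]
        )
    return result


if __name__ == "__main__":
    camera_frame = [[0.2, 0.2], [0.2, 0.2]]  # uniform real-world view
    label = [[1.0, 1.0], [0.0, 0.0]]         # synthetic content to add
    mask = [[1.0, 0.5], [0.0, 0.0]]          # opaque, half-transparent, absent
    print(superimpose(camera_frame, label, mask))
```

Where the mask is 0 the real view passes through unchanged; where it is 1 the synthetic content replaces it, which is the basic effect an AR presentation relies on.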

A simple example of Augmented Reality is provided by Figure 1.

Figure 1 – A simple example of Augmented Reality

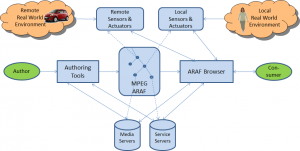

The Augmented Reality Application Format (ARAF) defines a format that includes the following elements:

- Elements of scene description suitable to represent AR content

- Mechanisms for a client to connect to local and remote sensors and actuators

- Mechanisms to integrate compressed media (image, audio, video, graphics)

- Mechanisms to connect to remote resources such as maps and compressed media

Figure 2 illustrates the scope of the standard.

Figure 2 – Scope of the ARAF standard

Starting from the left, the author of an ARAF file uses an ARAF Authoring Tool to define local interactions and access to media and service servers, and also to define what should happen when, for instance, a person passes in front of a local camera or a car passes in front of a remote camera.